I’ve been running some real-world tests to understand how much time the automated support tools can save. The first case I looked into was a small automated test we added some time ago to our internal tooling.

Before automation, this was a moderately complex process. To be honest, it wasn't the hardest one, since it was well documented in our internal processes, and in theory anyone on the team could follow that doc and do it. In practice, though, the test involved WP Admin and FTP access, editing plugin core files, and adding error logs, so it was usually handled by Tier 2 and more senior teammates.

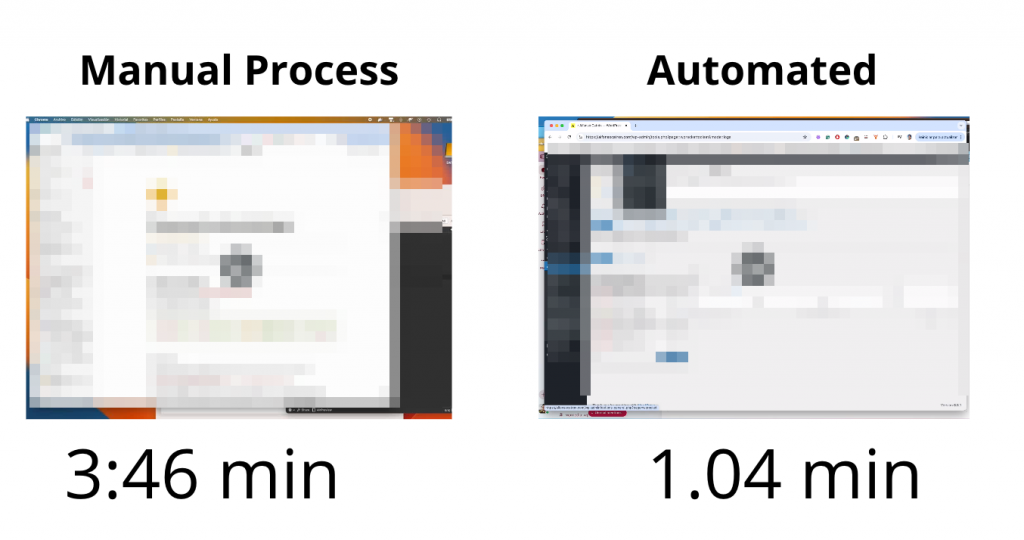

I’ve always been curious about how much time these tests actually save. Is it worth it? So I compared the old manual method with the automated one. My approach was not super scientific: I recorded my screen twice, once while doing the old manual process and once while using the tool.

Results

- Manual process: 3:46 minutes

- Automated with a checkbox: 1:04 minutes

- ⏱️ Time saved: 2:42 minutes

- 📈 Improvement: ~72% less time (roughly 3.5× faster)
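For anyone who wants to check the math or reuse it for their own mini reports, here is a quick sketch of how the numbers above can be derived from the two recorded durations. The helper name `to_seconds` is just an illustration, not part of our tooling.

```python
# Durations taken from the two screen recordings (mm:ss).
def to_seconds(mmss: str) -> int:
    """Convert an 'm:ss' string into a number of seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)

manual = to_seconds("3:46")     # 226 s for the manual process
automated = to_seconds("1:04")  # 64 s with the checkbox

saved = manual - automated      # seconds saved per run
reduction = saved / manual      # share of the original time removed
speedup = manual / automated    # how many times faster the tool is

print(f"Time saved: {saved // 60}:{saved % 60:02d}")
print(f"Time reduction: {reduction:.0%}")
print(f"Speedup: {speedup:.1f}x")
```

Note that "percent of time saved" (about 72%) and "times faster" (about 3.5×) are two different ways of expressing the same gap, so it helps to be explicit about which one you report.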

The real impact? Well, that goes beyond the numbers

By automating this single task, we didn’t just save time: we made a complex technique accessible to everyone, regardless of role or seniority.

It also simplifies onboarding for new teammates, reduces friction, and removes unnecessary dependencies on more senior teammates. And that’s the kind of impact that doesn’t show up on a clock.

If you’re into tooling, internal automation, and measuring impact, I’m planning to share more of these mini reports as we go. Stay tuned.
